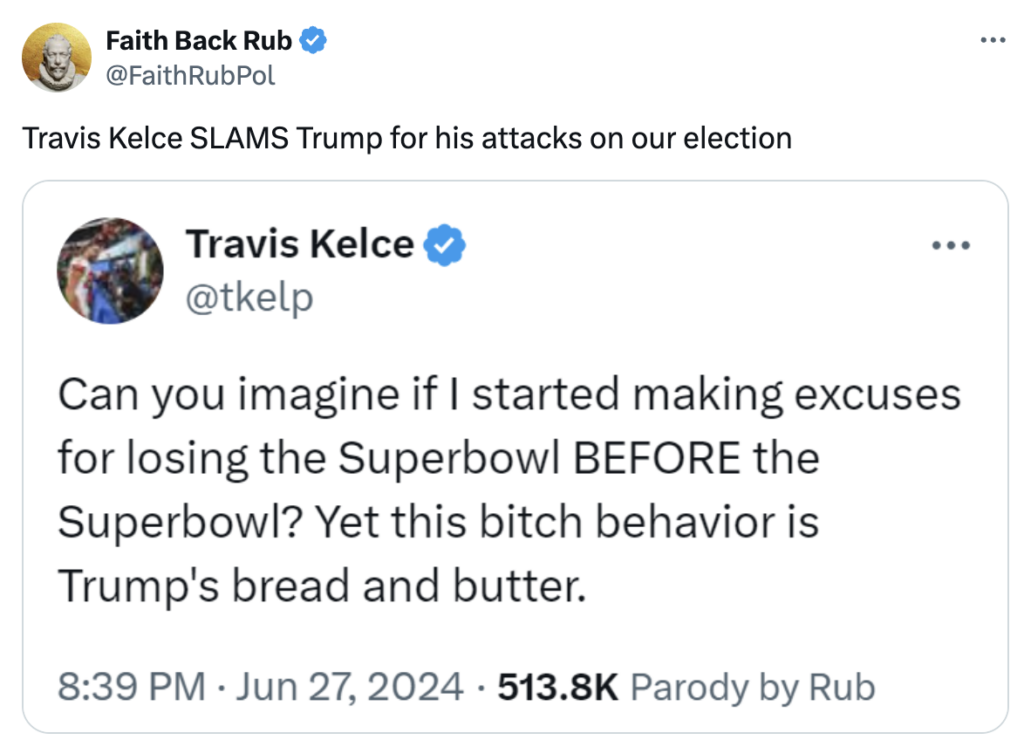

The other day I checked in on Twitter (I still can’t bring myself to call it X) and saw this tweet:

A few years ago, Twitter started injecting tweets from people I don’t follow into my “notifications” stream. I don’t know Religion Again Rub and had never heard of the account before. And yet Twitter’s algorithm somehow decided this was one of the most important things for me to see that day.

The message I received was “a famous American football player slammed a presidential candidate.” Then I moved on to something more interesting in my busy day.

But then I thought about it a little more. This celebrity American football player is usually non-political. He makes millions in product endorsements and podcast sponsorships. The statement seemed out of character. So I went back to the tweet and clicked on the actual Kelce message:

My reaction was: well, this is a verified account. It looks like Travis Kelce really did take a clever swipe at Trump. Surprising. But what is this “Parody by Rub” label in the corner? Is this real or not? Now I had to dig to figure out what was going on. Here’s the truth:

This didn’t come from Travis Kelce, but how could I have known that? Remember how it showed up in my feed: nothing indicated that this was fake news when it was shown to me. I read the headline and moved on.

As it turns out, most people who clicked through were fooled by this tweet, even though it was labeled a “parody.” I know this because the tweet drew nearly 1,000 comments. Most of them came from Trump supporters blasting Travis Kelce, who had nothing to do with the opinion.

And that is the real problem with social media. The threat to our society doesn’t necessarily come from what people say. It comes from algorithms amplifying disinformation.

The implications of amplification

Everybody has a right to say what they want to say, even if it’s wrong or controversial. When the American Founding Fathers drafted the Constitution, even the most powerful and compelling voice of the day could only hope that somebody would read their pamphlet or hear their speech. Information spread slowly and mostly locally. Even a juicy conspiracy theory couldn’t easily get national attention.

But today, damaging content can spread instantly and globally. That puts a new spin on the issue of free speech.

U.S. Supreme Court Justice Oliver Wendell Holmes famously said there is a limit on free speech: it does not protect someone “falsely shouting fire in a theatre and causing a panic.” But today, anybody can yell fire, and it can sway the opinions of hundreds, thousands, or even millions of people. Amplification matters. Amplification is the threat. Why isn’t anybody taking responsibility for this?

Social media companies must be accountable

Let’s think through the case study I presented today.

- Twitter’s algorithm, not a human being, decided to push news clearly marked as fake into users’ feeds without indicating that it was a parody (the first screenshot above).

- If the comments are any guide, about two-thirds of the people who saw this tweet thought it was real. That’s roughly 342,000 people.

- But that’s just the beginning. This fake news was retweeted 7,700 times!

This example was relatively harmless. The parody tweet probably caused Travis Kelce some irritation, but maybe that comes with the life of a celebrity.

But what if this amplified fake tweet had been devastatingly serious?

- What if a “verified account” called off evacuations in the middle of a hurricane?

- What if a fake account said every computer had been hacked and would blow up today?

- What if the tweet accused Travis Kelce of beating up his girlfriend, Taylor Swift?

My point is that Twitter, and any other platform that uses algorithms to knowingly spread false claims, should be held accountable.

In a recent interview, author and historian Yuval Noah Harari made this comparison: people can leave any comment they want on an article in The New York Times, even if it’s false. But amplification by social media companies is like the newspaper taking a bizarre, false comment and putting it on its front page.

That’s irresponsible and dangerous to society. Nobody would stand for that. And yet, we do.

Aim at amplification

As we enter the AI era, the danger of fake news, and its consequences, grows profoundly.

Let’s cut to the chase: Twitter knowingly lied to me to increase my time on its site and benefit its bottom line.

While it would be nearly impossible for any platform to monitor the comments of millions (or billions) of users, it is much easier to hold companies accountable for spreading known false information to innocent people. This is a simple first step toward protecting people from dangerous falsehoods.

Why is nobody talking about this? Addressing bot-driven “sensational amplification” is a much easier fix than trying to regulate or suppress free speech. This must be a regulatory priority.

Follow Mark on Twitter, LinkedIn, YouTube, and Instagram