30-second summary:

- The current reality is that Google presses the button and updates its algorithm, which in turn can update site rankings

- What if we are entering a world where it is less of Google pressing a button and more of the algorithm automatically updating rankings in “real-time”?

- Advisory Board member and Wix’s Head of SEO Branding, Mordy Oberstein, shares his data observations and insights

If you’ve been doing SEO even for a short while, chances are you’re familiar with a Google algorithm update. Every so often, whether we like it or not, Google presses the button and updates its algorithm, which in turn can update our rankings. The key phrase here is “presses the button.”

But what if we are entering a world where it is less of Google pressing a button and more of the algorithm automatically updating rankings in “real-time”? What would that world look like and who would it benefit?

What do we mean by continuous real-time algorithm updates?

It is obvious that technology is constantly evolving, but what needs to be made clear is that this applies to Google’s algorithm as well. As the technology available to Google improves, the search engine can do things like better understand content and assess websites. However, this technology needs to be interjected into the algorithm. In other words, as new technology becomes available to Google or as the current technology improves (we might refer to this as machine learning “getting smarter”), Google, in order to utilize these advancements, needs to “make them a part” of its algorithms.

Take MUM, for example. Google has started to use aspects of MUM in the algorithm. However, at the time of writing, MUM is not fully implemented. As time goes on, and based on Google’s previous announcements, MUM is almost certainly going to be applied to additional algorithmic tasks.

Of course, once Google introduces new technology or has refined its current capabilities, it will likely want to reassess rankings. If Google is better at understanding content or assessing site quality, wouldn’t it want to apply these capabilities to the rankings? When it does so, Google “presses the button” and releases an algorithm update.

So, say one of Google’s current machine learning properties has evolved. It has taken in input over time and has been refined; it’s “smarter,” for lack of a better word. Google may elect to “reintroduce” this refined machine learning property into the algorithm and reassess the pages being ranked accordingly.

These updates are specific and purposeful. Google is “pushing the button.” This is most clearly seen when Google announces something like a core update, a product review update, or even a spam update.

In fact, perhaps nothing better concretizes what I’ve been saying here than what Google said about its spam updates:

“While Google’s automated systems to detect search spam are constantly operating, we occasionally make notable improvements to how they work…. From time to time, we improve that system to make it better at spotting spam and to help ensure it catches new types of spam.”

In other words, Google was able to develop an improvement to a current machine learning property and released an update so that this improvement could be applied to ranking pages.

If this process is “manual” (to use a crude word), what then would continuous “real-time” updates be? Let’s take Google’s Product Review Updates. Initially released in April of 2021, Google’s Product Review Updates aim at weeding out product review pages that are thin, unhelpful, and (if we’re going to call a spade a spade) exist essentially to earn affiliate revenue.

To do this, Google is using machine learning in a specific way, looking at specific criteria. With each iteration of the update (such as those in December 2021, March 2022, etc.), these machine learning apparatuses have the opportunity to recalibrate and refine. Meaning, they can potentially become more effective over time as the machine “learns,” which is kind of the point when it comes to machine learning.

What I theorize, at this point, is that as these machine learning properties refine themselves, rank fluctuates accordingly. Meaning, Google allows machine learning properties to “recalibrate” and impact the rankings. Google then reviews and analyzes the results and sees if the changes are to its liking.

We may know this process as unconfirmed algorithm updates (for the record, I am 100% not saying that all unconfirmed updates are as such). It’s why I believe there is such a strong tendency towards rank reversals in between official algorithm updates.

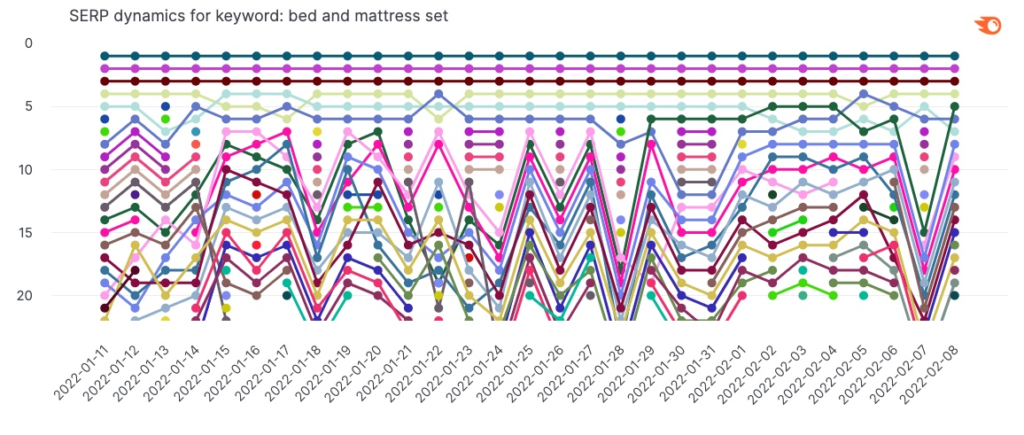

It’s quite common that the SERP will see a noticeable increase in rank fluctuations that can impact a page’s rankings, only to see those rankings reverse back to their original position with the next wave of rank fluctuations (whether that be a few days later or weeks later). In fact, this process can repeat itself multiple times. The net effect is a given page seeing rank changes followed by a reversal or a series of reversals.

A series of rank reversals impacting almost all pages ranking between position 5 and 20 that align with across-the-board heightened rank fluctuations
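To make the idea of a rank reversal concrete, here is a minimal Python sketch of how one might flag reversals in a single page's daily positions. It is an illustration only, not how any rank tracker or Google itself works: the function name, the `min_shift` and `tolerance` parameters, and the sample positions are all hypothetical.

```python
from typing import List, Tuple


def find_rank_reversals(
    ranks: List[int], min_shift: int = 3, tolerance: int = 1
) -> List[Tuple[int, int]]:
    """Return (shift_day, return_day) index pairs for each reversal.

    A reversal here means the rank moved at least `min_shift` positions
    away from its baseline and later came back to within `tolerance`
    positions of that baseline. Both thresholds are assumptions.
    """
    reversals: List[Tuple[int, int]] = []
    if not ranks:
        return reversals

    baseline = ranks[0]
    shift_day = None  # day index on which the rank left its baseline

    for day, rank in enumerate(ranks[1:], start=1):
        if shift_day is None:
            if abs(rank - baseline) >= min_shift:
                shift_day = day  # rank has jumped away from the baseline
            else:
                baseline = rank  # minor drift: follow it as the new baseline
        elif abs(rank - baseline) <= tolerance:
            reversals.append((shift_day, day))  # rank is back near baseline
            shift_day = None
            baseline = rank

    return reversals


# Made-up daily positions for one page (not real data).
daily_ranks = [8, 8, 14, 15, 14, 8, 9, 15, 14, 9]
print(find_rank_reversals(daily_ranks))
```

With these made-up positions the page drops from around position 8 to around 14 and returns twice, so the function reports two reversals. A shift that never returns to the baseline is not reported, which matches the distinction between a reversal and a lasting change.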

This trend, as I see it, is Google allowing its machine learning properties to evolve or recalibrate (or however you’d like to describe it) in real-time. Meaning, no one is pushing a button over at Google; rather, the algorithm is adjusting to the continuous “real-time” recalibration of the machine learning properties.

It’s this dynamic that I am referring to when I question if we are heading toward “real-time” or “continuous” algorithmic rank adjustments.

What would a continuous real-time Google algorithm mean?

So what? What if Google adopted a continuous real-time model? What would the practical implications be?

In a nutshell, it would mean that rank volatility would be far more of a constant. Instead of waiting for Google to push the button on an algorithm update in order for rank to be significantly impacted, this would simply be the norm. The algorithm would be constantly evaluating pages and sites “on its own” and making adjustments to rank in closer to real-time.

Another implication would be not having to wait for the next update for recovery. While not a hard-and-fast rule, if you are significantly impacted by an official Google update, such as a core update, you generally won’t see rank recovery occur until the release of the next version of the update, whereupon your pages will be evaluated. In a real-time scenario, pages are constantly being evaluated, much the way links are with Penguin 4.0, which was released in 2016. To me, this would be a major change to the current “SERP ecosystem.”

I would even argue that, to an extent, we already have a continuous “real-time” algorithm. In fact, that we at least partially have a real-time Google algorithm is simply fact. As mentioned, in 2016 Google released Penguin 4.0, which removed the need to wait for another version of the update, as this specific algorithm evaluates pages on a constant basis.

However, outside of Penguin, what do I mean when I say that, to an extent, we already have a continuous real-time algorithm?

The case for real-time algorithm adjustments

The constant “real-time” rank adjustments that occur in the ecosystem are so significant that they refined the volatility landscape.

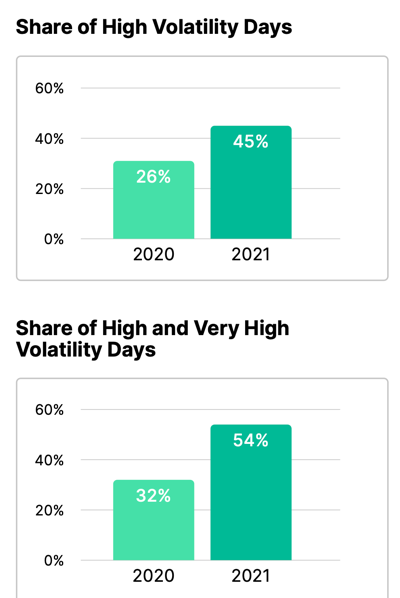

Per Semrush data I pulled, there was a 58% increase in the number of days that reflected high rank volatility in 2021 as compared to 2020. Similarly, there was a 59% increase in the number of days that reflected either high or very high levels of rank volatility.
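For readers who want to run this kind of year-over-year comparison on their own tracking data, the arithmetic can be sketched roughly as follows. This is not the Semrush methodology: the function names, the `HIGH_VOLATILITY` cutoff of 5.0, and the short score lists are hypothetical placeholders, so the output does not reproduce the 58% or 59% figures above.

```python
from typing import Iterable


def count_days_at_or_above(scores: Iterable[float], threshold: float) -> int:
    """Count the days whose volatility score meets or exceeds the threshold."""
    return sum(1 for score in scores if score >= threshold)


def percent_increase(before: int, after: int) -> float:
    """Percentage change from `before` to `after`."""
    if before == 0:
        raise ValueError("baseline count is zero; percent increase is undefined")
    return (after - before) / before * 100


# Assumed cutoff for a "high volatility" day (hypothetical value).
HIGH_VOLATILITY = 5.0

# Made-up daily volatility scores; a real analysis would use a full year each.
scores_2020 = [3.1, 5.2, 4.0, 6.1, 2.9, 5.5, 3.8, 5.0]
scores_2021 = [5.4, 6.0, 4.9, 5.1, 7.2, 5.3, 5.8, 4.1]

high_days_2020 = count_days_at_or_above(scores_2020, HIGH_VOLATILITY)
high_days_2021 = count_days_at_or_above(scores_2021, HIGH_VOLATILITY)

print(high_days_2020, high_days_2021)
print(round(percent_increase(high_days_2020, high_days_2021), 1))
```

The point of the sketch is only that the metric counts days above a cutoff rather than measuring how far rankings moved, which is why it can rise even when individual movements get smaller.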

Simply put, there is a significant increase in the number of instances that reflect elevated levels of rank volatility. After studying these trends and looking at the ranking patterns, I believe the aforementioned rank reversals are the cause. Meaning, a large portion of the increased instances of rank volatility are coming from what I believe to be machine learning continually recalibrating in “real-time,” thereby producing unprecedented levels of rank reversals.

Supporting this is the fact that, along with the increased instances of rank volatility, we did not see increases in how drastic the rank movement is. Meaning, there are more instances of rank volatility, but the degree of volatility did not increase.

In fact, there was a decrease in how dramatic the average rank movement was in 2021 relative to 2020!

Why? Again, I chalk this up to the recalibration of machine learning properties and their “real-time” impact on rankings. In other words, we’re starting to see more micro-movements that align with the natural evolution of Google’s machine learning properties.

When a machine learning property is refined as its intake and learning advance, you’re unlikely to see massive swings in the rankings. Rather, you will see a refinement in the rankings that aligns with the refinement in the machine learning itself.

Hence, the rank movement we’re seeing, as a rule, is far more constant yet not as drastic.
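The distinction between how often rankings move and how far they move can be shown with a small Python sketch. Everything in it is an assumption for illustration: the `volatility_profile` helper, the `volatile_at` cutoff, and the two made-up series of average daily position changes, which are not the Semrush data discussed above.

```python
from statistics import mean
from typing import Dict, List


def volatility_profile(daily_moves: List[float], volatile_at: float) -> Dict[str, float]:
    """Summarize frequency and magnitude of volatile days.

    `daily_moves` holds the average number of positions pages moved each day;
    a day counts as volatile when that value is at least `volatile_at`.
    """
    volatile_days = [move for move in daily_moves if move >= volatile_at]
    return {
        "volatile_days": len(volatile_days),
        "avg_move_on_volatile_days": mean(volatile_days) if volatile_days else 0.0,
    }


# Made-up series: a few large swings versus many smaller ones.
earlier_period = [1.0, 4.0, 1.2, 6.0, 0.8, 1.1]
later_period = [2.5, 3.0, 1.0, 2.5, 3.0, 2.0]

print(volatility_profile(earlier_period, volatile_at=2.0))
print(volatility_profile(later_period, volatile_at=2.0))
```

In this toy data the later period has more volatile days but a smaller average movement on those days, which is the shape of the pattern described above: more constant, less drastic.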

The final step towards continuous real-time algorithm updates

While much of the ranking movement that occurs is continuous in that it is not dependent on specific algorithmic refreshes, we’re not fully there yet. As I mentioned, much of the rank volatility is a series of reversing rank positions. Changes to these ranking patterns, again, are often not solidified until the rollout of an official Google update, most commonly an official core algorithm update.

Until the longer-lasting ranking patterns are set without the need to “press the button,” we don’t have a full-on continuous or “real-time” Google algorithm.

However, I have to wonder if the trend is not heading toward that. For starters, Google’s Helpful Content Update (HCU) does function in real-time.

Per Google:

“Our classifier for this update runs continuously, allowing it to monitor newly-launched sites and existing ones. As it determines that the unhelpful content has not returned in the long-term, the classification will no longer apply.”

How is this so? The same as what we’ve been saying all along here: Google has allowed its machine learning to have the autonomy it would need to be “real-time” or, as Google calls it, “continuous”:

“This classifier process is entirely automated, using a machine-learning model.”

For the record, continuous does not mean ever-changing. In the case of the HCU, there’s a logical validation period before recovery. Should we ever see a “truly” continuous real-time algorithm, this may apply in various ways as well. I don’t want to suggest that, should we ever see a “real-time” algorithm, there will be a ranking response the moment you make a change to a page.

At the same time, the “traditional,” officially “button-pushed” algorithm update has become less impactful over time. In a study I conducted back in late 2021, I noticed that Semrush data indicated that since 2018’s Medic Update, the core updates being released were becoming significantly less impactful.

Data indicates that Google’s core updates are presenting less rank volatility overall as time goes on

Subsequently, this trend has continued. Per my analysis of the September 2022 Core Update, there was a noticeable drop-off in the volatility seen relative to the May 2022 Core Update.

Rank volatility change was far less dramatic during the September 2022 Core Update relative to the May 2022 Core Update

It’s a dual convergence. Google’s core update releases seem to be less impactful overall (obviously, individual sites can get slammed just as hard) while at the same time its latest update (the HCU) is continuous.

To me, it all points towards Google looking to abandon the traditional algorithm update release model in favor of a more continuous construct. (Further evidence could be in how the release of official updates has changed. If you look back at the various outlets covering these updates, the data will show you that the roll-out now tends to be slower, with fewer days of increased volatility and, again, with less overall impact.)

The question is, why would Google want to go to a more continuous real-time model?

Why a continuous real-time Google algorithm is beneficial

A real-time continuous algorithm? Why would Google want that? It’s pretty simple, I think. Having an update that continuously refreshes rankings to reward the appropriate pages and sites is a win for Google (again, I don’t mean instant content revision or optimization resulting in instant rank change).

Which is more beneficial to Google’s users: a continuous-like updating of the best results, or periodic updates that can take months to present change?

The idea of Google continuously analyzing and updating in a more real-time scenario is simply better for users. How does it help a user looking for the best result to have rankings that reset periodically with each new iteration of an official algorithm update?

Wouldn’t it be better for users if a site, upon seeing its rankings slip, made changes that resulted in some great content and, instead of waiting months to have it rank well, users could access it on the SERP far sooner?

Continuous algorithmic implementation means that Google can get better content in front of users far faster.

It’s also better for websites. Do you really enjoy implementing a change in response to ranking loss and then having to wait perhaps months for recovery?

Also, the fact that Google would so heavily rely on machine learning and trust the adjustments it was making only happens if Google is confident in its ability to understand content, relevancy, authority, etc. SEOs and site owners should want this. It means that Google could rely less on secondary signals and more directly on the primary commodity: content and its relevance, trustworthiness, etc.

Google being able to more directly assess content, pages, and domains overall is healthy for the web. It also opens the door for niche sites and sites that are not massive super-authorities (think the Amazons and WebMDs of the world).

Google’s better understanding of content creates more parity. Google moving towards a more real-time model would be a manifestation of that better understanding.

A new way of thinking about Google updates

A continuous real-time algorithm would intrinsically change the way we would have to think about Google updates. It would, to a greater or lesser extent, make tracking updates as we now know them essentially obsolete. It would change the way we look at SEO weather tools in that, instead of looking for specific moments of increased rank volatility, we’d pay more attention to overall trends over an extended period of time.

Based on the ranking trends we already discussed, I’d argue that, to a certain extent, that time has already come. We’re already living in an environment where rankings fluctuate far more than they used to, and that, to an extent, has redefined what stable rankings mean in many situations.

To both conclude and put things simply, edging closer to a continuous real-time algorithm is part and parcel of a new era in ranking organically on Google’s SERP.

Mordy Oberstein is Head of SEO Branding at Wix. Mordy can be found on Twitter @MordyOberstein.
