A few years ago, a client asked me to train a content AI to do my job. I was in charge of content for a newsletter sent to more than 20,000 C-suite leaders. Each week, I curated 20 well-written, subject-matter-relevant articles from dozens of third-party publications.

But the client insisted that he wanted the content AI to pick the articles instead, with the ultimate goal of fully automating the newsletter.

I was genuinely curious whether we could do it and how long it would take. For the next year, I worked with a business partner and a data scientist to deconstruct what makes articles “good” and “interesting.” Our end result was… mediocre.

The AI could surface articles that were similar to ones the audience had engaged with in the past, cutting the time I needed to curate content by about 20%. Turns out, there was a lot we could teach an AI about “good” writing (active sentences, varied verbs), but we couldn’t make it smart. That’s another way of saying we couldn’t teach it to recognize the ineffable nature of a fresh idea or a dynamic way of talking about it.
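
The sketch below shows the simplest form of “similar to what the audience engaged with.” It is a generic illustration, not the system we built. It scores each candidate article by bag-of-words cosine similarity against articles readers previously engaged with. The function names and sample headlines are hypothetical.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercase the text and count each word."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two word-count vectors (0.0 if either is empty)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_candidates(engaged: list[str], candidates: list[str]) -> list[tuple[float, str]]:
    """Score each candidate by its best match against previously engaged articles."""
    profiles = [bag_of_words(text) for text in engaged]
    scored = []
    for text in candidates:
        vec = bag_of_words(text)
        best = max((cosine_similarity(vec, p) for p in profiles), default=0.0)
        scored.append((best, text))
    return sorted(scored, reverse=True)  # highest similarity first


# Hypothetical engagement history and candidate headlines.
engaged = ["How CEOs lead through supply chain disruption"]
candidates = [
    "A recipe for summer salads",
    "Supply chain disruption lessons for CEOs",
]
for score, title in rank_candidates(engaged, candidates):
    print(f"{score:.2f}  {title}")
```

Word overlap is also the limitation. Nothing in a score like this can tell a fresh idea from a stale one.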

Eventually, my client pulled the plug on the AI project and, ultimately, on the newsletter itself. But I’ve been thinking about that experience over the past few months as large language models (LLMs) like OpenAI’s GPT-3 have gained broader mainstream attention.

I wonder: would we have been more successful today using an API into GPT-3?

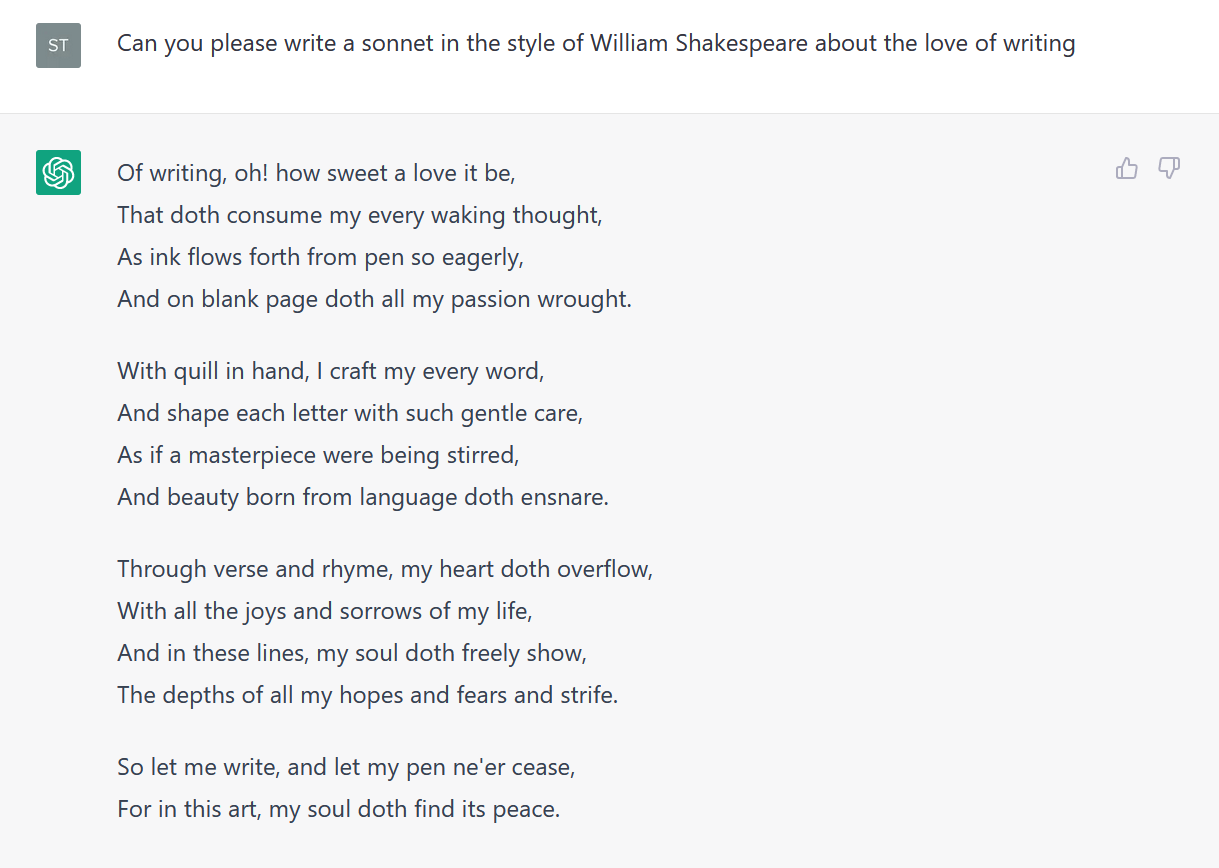

GPT-3 is the foundation of more familiar products like ChatGPT and Jasper, which have an impressive ability to understand language prompts and craft cogent text at lightning speed on almost any topic.

Jasper even claims it enables teams to “create content 10X faster.” But the problematic grammar of getting 10X faster at something (I think they mean it takes one-tenth of the time?) highlights the negative flip side of content AI.

I’ve written about the superficial substance of AI-generated content and how these tools often make stuff up. Impressive as they are in terms of speed and fluency, today’s large language models don’t think or understand the way humans do.

But what if they did? What if the current limitations of content AI were resolved? These are the limitations that keep the pen firmly in the hands of human writers and thinkers, just as I held onto it in that newsletter job. Or, put simply: What if content AI were actually smart?

Let’s walk through a few ways in which content AI has already gotten smarter, and how content professionals can use these advances to their advantage.

5 Ways Content AI Is Getting Smarter

To understand why content AI isn’t really smart yet, it helps to recap how large language models work. GPT-3 and other “transformer models” (like PaLM by Google or AlexaTM 20B by Amazon) are deep learning neural networks that simultaneously evaluate all of the data (i.e., words) in a sequence (i.e., a sentence) and the relationships between them.
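
The “evaluate everything at once” idea is called self-attention. Below is a stripped-down sketch of scaled dot-product attention over a toy three-word sentence, in plain Python. The 2-dimensional word vectors are made up for illustration. Real transformers also learn separate query, key, and value projections and stack many such layers. This sketch skips all of that and uses each input vector in all three roles.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors: list[list[float]]) -> list[list[float]]:
    """Scaled dot-product self-attention with queries = keys = values = the inputs.

    Every word is scored against every word in the same pass, and each output
    is a weighted average of all input vectors.
    """
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim) for key in vectors]
        weights = softmax(scores)
        outputs.append([sum(w * value[i] for w, value in zip(weights, vectors)) for i in range(dim)])
    return outputs


# Hypothetical 2-D embeddings for the toy sentence "the cat sat".
sentence = {"the": [0.1, 0.0], "cat": [1.0, 0.5], "sat": [0.8, 0.6]}
for word, vec in zip(sentence, self_attention(list(sentence.values()))):
    print(word, [round(x, 3) for x in vec])
```

Notice what the math does and doesn’t do. It measures how strongly words relate to one another inside the sequence. Nothing in it connects a word to the thing it refers to in the world.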

To train GPT-3, the developers at OpenAI used web content. That gave them far more training data than earlier models had, and the model itself had far more parameters. Together, these enabled more fluent outputs for a broader set of applications. Transformers don’t understand these words, however, or what they refer to in the world. The models can only see how words are usually ordered in sentences and the syntactic relationships between them.

As a result, today’s content AI works by predicting the next words in a sequence based on millions of similar sentences it has seen before. That’s one reason why “hallucinations” (made-up information) and misinformation are so common with large language models. These tools are simply creating sentences that look like other sentences they’ve seen in their training data. Inaccuracies, irrelevant information, debunked facts, false equivalencies: all of it will show up in generated language if it exists in the training content.
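
A toy next-word predictor makes the hallucination problem concrete. The sketch below is a bigram model, far simpler than an LLM. It learns only which word tends to follow which in a tiny, made-up training corpus, then extends a prompt by sampling from those counts. Real LLMs condition on much more context than one previous word, so this exaggerates the effect. The failure mode is still of the same kind.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(sentences: list[str]) -> dict[str, Counter]:
    """Count which word follows which across the training sentences."""
    model: dict[str, Counter] = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            model[current][following] += 1
    return model


def next_word_probabilities(model: dict[str, Counter], word: str) -> dict[str, float]:
    """Relative frequency of each word seen after `word` in training."""
    counts = model.get(word, Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def generate(model: dict[str, Counter], start: str, max_words: int, seed: int = 0) -> str:
    """Extend `start` one word at a time by sampling from the bigram counts."""
    rng = random.Random(seed)
    words = start.lower().split()
    while len(words) < max_words:
        probs = next_word_probabilities(model, words[-1])
        if not probs:
            break  # no word ever followed this one in training
        choices, weights = zip(*probs.items())
        words.append(rng.choices(choices, weights=weights)[0])
    return " ".join(words)


corpus = [
    "the moon orbits the earth",
    "the earth orbits the sun",
    "the sun is a star",
]
model = train_bigrams(corpus)
print(next_word_probabilities(model, "the"))  # {'moon': 0.2, 'earth': 0.4, 'sun': 0.4}
print(generate(model, "the moon orbits the", max_words=5, seed=1))
```

After “the moon orbits the,” this model rates “sun” exactly as likely as “earth.” It only knows which words tend to follow “the,” not which statement is true. A fluent, false sentence is a perfectly normal output.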

And yet, these aren’t necessarily unsolvable problems. In fact, data scientists already have a few ways to tackle these issues.

Solution #1: Content AI Prompting

Anyone who has tried Jasper, Copy.ai, or another content AI app is familiar with prompting. Basically, you tell the tool what you want to write and sometimes how you want to write it. There are simple prompts, such as “List the advantages of using AI to write blog posts.”

Prompts can also be more sophisticated. For example, you can input a sample paragraph or page of text written according to your company’s rules and voice. Then you prompt the content AI to generate subject lines, social copy, or a new paragraph in the same voice and style.

Prompts are a first-line strategy for setting rules that narrow the output from content AI. Keeping your prompts focused, direct, and specific can help limit the chances that the AI will generate off-brand or misinformed copy. For more guidance, check out AI researcher Lance Eliot’s nine rules for composing prompts to limit hallucinations.
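
Here is a minimal sketch of a focused, voice-matching prompt assembled in Python. The `build_voice_prompt` helper, its rules, and the sample copy are all hypothetical, not taken from any particular tool. The output is plain text you would paste into, or send to, whichever content AI you use.

```python
def build_voice_prompt(sample: str, task: str, audience: str, max_words: int) -> str:
    """Assemble a focused prompt that pins down voice, task, audience, and length."""
    lines = [
        f"You are writing for {audience}.",
        "Match the voice and style of this sample from our company:",
        "---",
        sample.strip(),
        "---",
        f"Task: {task}",
        "Rules:",
        f"- Use no more than {max_words} words.",
        "- Do not invent statistics, quotes, or sources.",
        "- If you are unsure of a fact, leave it out.",
    ]
    return "\n".join(lines)


# Hypothetical brand sample and task.
prompt = build_voice_prompt(
    sample="We keep it short. We skip the jargon. We always end with a next step.",
    task="Write three email subject lines announcing our new pricing page.",
    audience="busy finance leaders",
    max_words=40,
)
print(prompt)
```

Keeping the voice sample, the task, and the rules in separate fields makes it easy to reuse one approved brand sample across many tasks.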

Solution #2: “Chain of Thought” Prompting

Consider how you would solve a math problem or give someone directions in an unfamiliar city with no street signs. You’d probably break the problem down into multiple steps and solve each one, using deductive reasoning to find your way to the answer.

Chain of thought prompting uses a similar approach: it breaks a reasoning problem down into multiple steps. The goal is to prime the LLM to produce text that reflects something resembling a reasoning or common-sense thinking process.

Scientists have used chain of thought techniques to improve LLM performance on math problems. They have also used them on more complex tasks, such as inference, which humans perform automatically based on their contextual understanding of language. Experiments show that with chain of thought prompts, users can get more accurate results from LLMs.

Some researchers are even working to create add-ons to LLMs with pre-written chain of thought prompts, so that the average user doesn’t need to learn how to write them.
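
Here is a minimal sketch of a few-shot chain of thought prompt, built as plain text in Python. The worked example and the new question are hypothetical. What makes this chain of thought is the example answer: it spells out each intermediate step instead of jumping straight to the result. That nudges the model to write out steps of its own before answering.

```python
# Hypothetical worked example. The answer shows each step, not just the result.
WORKED_EXAMPLE = (
    "Q: A newsletter goes to 20,000 readers. 25% open it, and 10% of the "
    "readers who open it click a link. How many readers click?\n"
    "A: 25% of 20,000 is 5,000 opens. 10% of 5,000 is 500 clicks. "
    "The answer is 500."
)


def chain_of_thought_prompt(question: str, examples: tuple[str, ...] = (WORKED_EXAMPLE,)) -> str:
    """Put step-by-step worked examples ahead of the new question."""
    shots = "\n\n".join(examples)
    return f"{shots}\n\nQ: {question}\nA:"


prompt = chain_of_thought_prompt(
    "A blog post gets 8,000 visits. 5% of visitors sign up for the newsletter, "
    "and half of those confirm their email. How many confirmed sign-ups are there?"
)
print(prompt)
```

A standard few-shot prompt would use the same example with the answer cut down to “The answer is 500.” An add-on of the kind mentioned above could keep a library of worked examples like this one and wrap each user request automatically.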

Solution #3: Fine-tuning Content AI

Fine-tuning involves taking a pre-trained large language model and training it to perform a specific task in a specific field. You do this by exposing it to relevant data and eliminating irrelevant data.

A fine-tuned language model ideally has all the language recognition and generative fluency of the original, but it focuses on a narrower context for better results. Codex, the OpenAI derivative of GPT-3 for writing computer code, is a fine-tuned model.

There are hundreds of other examples of fine-tuning for tasks like legal writing, financial reports, tax information, and so on. An organization can fine-tune a model on copy about legal cases or tax returns and correct inaccuracies in the generated results. That way, it can develop a new tool that reliably drafts content with fewer hallucinations.
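
Much of the practical work in fine-tuning is preparing examples. The sketch below filters a small set of examples down to the relevant ones. It writes them as JSON Lines records with `prompt` and `completion` fields, the general shape GPT-3’s original fine-tuning endpoint accepted. Current fine-tuning services may expect a different schema, such as chat-style messages, so check your provider’s documentation. The keyword filter, the file name, and the sample records are hypothetical. The bracketed completions stand in for answers a human expert has checked and corrected.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical relevance filter for a tax-focused model: keep only examples
# that mention at least one of these terms.
TAX_TERMS = {"deduction", "filing", "irs", "taxable", "withholding"}


def is_relevant(text: str) -> bool:
    """True if the text mentions any tax term (a crude stand-in for real curation)."""
    cleaned = text.lower().replace(",", " ").replace(".", " ").replace("?", " ")
    return bool(set(cleaned.split()) & TAX_TERMS)


def write_finetune_file(pairs: list[tuple[str, str]], path: Path) -> int:
    """Write relevant (prompt, completion) pairs as JSON Lines; return how many were kept."""
    kept = 0
    with path.open("w", encoding="utf-8") as f:
        for prompt, completion in pairs:
            if not is_relevant(prompt + " " + completion):
                continue  # eliminate irrelevant data
            f.write(json.dumps({"prompt": prompt, "completion": completion}) + "\n")
            kept += 1
    return kept


# Completions should be answers a human expert has checked and corrected.
examples = [
    ("Explain when a year-end bonus is subject to withholding.",
     "[answer reviewed by a tax specialist]"),
    ("List the documents needed for filing a small-business return.",
     "[answer reviewed by a tax specialist]"),
    ("Write a tweet about our summer picnic.", "[approved social copy]"),
]
out_path = Path(tempfile.gettempdir()) / "tax_finetune.jsonl"
print(write_finetune_file(examples, out_path), "examples written to", out_path)
```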

If it seems implausible that these government-driven or regulated fields would use such untested technology, consider the case of a Colombian judge who reportedly used ChatGPT to draft his decision brief (without fine-tuning).

Solution #4: Specialized Model Development

Many view fine-tuning a pre-trained model as a fast and relatively inexpensive way to build new models. It’s not the only way, though. With enough budget, researchers and technology providers can use the techniques behind transformer models to develop specialized language models for specific domains or tasks.

For example, a group of researchers at the University of Florida, in partnership with AI technology provider Nvidia, developed a health-focused large language model. It evaluates and analyzes language data in the electronic health records used by hospitals and medical practices.

The result was reportedly the largest-known LLM designed to evaluate the content of medical records. The team has already developed a related model based on synthetic data. That approach eases the privacy concerns that come with building a content AI on personal medical records.

Solution #5: Add-on Functionality

Generating content is often part of a larger workflow within a business. So some developers are adding functionality on top of content generation to deliver greater value.

For example, as mentioned in the section on chain of thought prompting, researchers are trying to develop prompting add-ons for GPT-3 so that everyday users don’t have to learn to prompt well.

That’s just one example. Another comes from Jasper, which recently announced a set of Jasper for Business enhancements in a clear bid for enterprise-level contracts. These include a user interface that lets users define their organization’s “brand voice” and apply it to all the copy they create. Jasper has also developed bots that let people use Jasper inside business applications that require text.

Another solution provider, ABtesting.ai, layers web A/B testing capabilities on top of language generation. It tests different variants of web copy and CTAs to identify the best performers.
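
ABtesting.ai’s method for picking winners isn’t described here. As a generic sketch of the statistics behind a simple A/B test, the function below runs a two-proportion z-test on click counts for two CTA variants. The variant copy and all counts are made up.

```python
import math


def two_proportion_z_test(clicks_a: int, views_a: int,
                          clicks_b: int, views_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in click-through rates (B minus A)."""
    rate_a = clicks_a / views_a
    rate_b = clicks_b / views_b
    pooled = (clicks_a + clicks_b) / (views_a + views_b)  # rate if both variants were equal
    se = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    z = (rate_b - rate_a) / se
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value from the standard normal
    return z, p


# Hypothetical results: A = "Download the guide", B = "Get your free guide".
z, p = two_proportion_z_test(clicks_a=120, views_a=4000, clicks_b=165, views_b=4000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Pick the significance threshold before the test starts (0.05 is common). Stopping the test as soon as the numbers look good inflates the chance of declaring a false winner.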

Next Steps for Leveraging Content AI

The techniques I’ve described so far are enhancements of, or workarounds for, today’s foundational models. As AI continues to evolve, however, researchers will build AI with abilities closer to real thinking and reasoning.

Some researchers are still chasing the Holy Grail of “artificial general intelligence” (AGI), a kind of meta-AI that could handle a wide variety of computational tasks. Others are exploring ways to enable AI to engage in abstraction and analogy.

The message for those of us whose lives and passions are wrapped up in content creation is this: AI is going to keep getting smarter. But we can “get smarter,” too.

I don’t mean that human creators should try to beat an AI at the kinds of tasks that require massive computing power. With the advent of LLMs, humans will no longer write more nurture emails and social posts than a content AI.

But for now, the AI needs prompts and inputs. Think of these as the core ideas about what to write. And even when a content AI surfaces something new and original, it still needs humans who recognize its value and make it a priority. In other words, innovation and imagination remain firmly in human hands. The more time we spend using those skills, the wider our lead.

Learn more about content strategy every week. Subscribe to The Content Strategist newsletter for more articles like this sent straight to your inbox.

Image by PhonlamaiPhoto